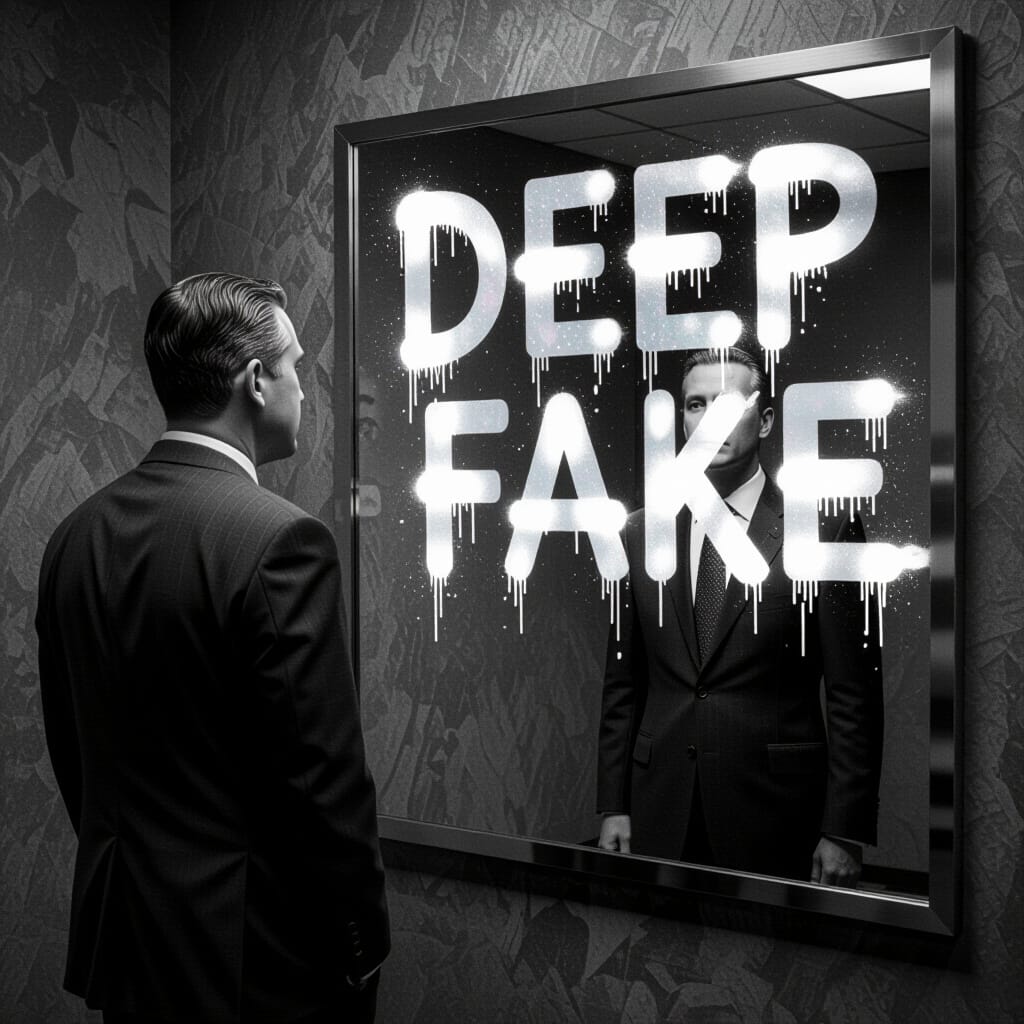

AI is continually getting better at creating “deepfakes” of real people. A deepfake is an AI-generated image or video that depicts real people saying and doing things they never actually said or did. Such deepfakes have fooled viewers into believing the scenes depicted to be real. Many are understandably alarmed about these developments in AI and fear the implications for the future: the political chaos and societal upheaval deepfakes may cause. While such negative outcomes are a worrisome threat, there is an upside to deepfakes as well.

With the inevitable proliferation of deepfakes online, people will no longer believe any image or video they see to be real—even the real ones. This is actually a good thing. People need to be more discerning and skeptical of the media (both professional media and social media). Any single image or video, even if real, is never the whole story.

Well before AI deepfakes, the media used real images and video, selectively chosen and edited, to promote a particular narrative. Viewers were too quick to believe everything on television without realizing that what they saw had been deliberately chosen, edited, and framed to support that narrative.

A simple example of this is the images of political figures that appear in the media. If a media source is biased against a figure, it will choose an unflattering image that makes the person look bad. If the source is biased in support of that figure, it will choose a flattering image that makes the person look good. The unflattering “photos” are usually still frames from a video, paused at the precise moment that makes the person look as bad as possible. It is a propaganda technique used to frame the viewer’s perception of the figure being depicted. Both sides do this all the time. Once you notice the technique, you can’t unsee it. So now when I see an image of a public figure making an awkward, unflattering face (when there are literally thousands of other photos the outlet could have chosen), I become highly skeptical of the story that follows about that person, knowing there will be at least some bias involved. The media is not outright lying in such cases (the photos are real); it is simply framing objective things in a certain way to make you believe its subjective narrative.

People need more skepticism toward “real” images and videos today. They have needed it since the invention of photography. Before that, there were only political cartoons in the newspaper, which people could readily recognize as the work of someone with a particular point of view. Deepfakes will force more media skepticism onto the average person, which is sorely needed. “Fool me once, shame on you; fool me twice, shame on me.” After the umpteenth time of getting fooled by a deepfake story on the internet, people will hesitate before believing the next story they see. This will result in people disbelieving some true stories, but I would argue that default skepticism is healthier than taking everything at face value (at least online).

Rather than instantly reacting to the “Current Thing” trending on social media, people will reserve judgment on most issues and demand more evidence for any given claim. With a higher standard for truth, society will become more reality-based overall. That is the possible long-term silver lining to the short-term chaos deepfakes will surely cause.